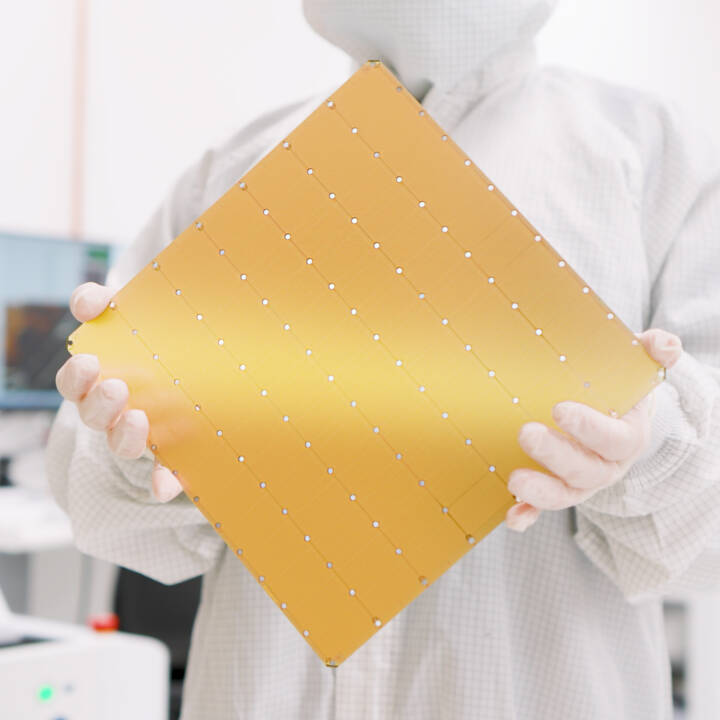

California-based AI supercomputer firm Cerebras has unveiled the Wafer Scale Engine 3 (WSE-3), its latest artificial intelligence (AI) chip, which packs a whopping four trillion transistors. It delivers twice the performance of the previous generation, the WSE-2, while consuming the same amount of power. The WSE-3 powers the Cerebras CS-3 AI supercomputer, which has a peak performance of 125 petaFLOPS.

The WSE-3 is currently the largest single chip in the world. It is a square measuring 21.5 centimeters on a side, and each chip is made from nearly an entire 300-millimeter silicon wafer.
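To put "nearly an entire wafer" in perspective, the short sketch below compares the chip's footprint with a round 300-millimeter wafer. It uses only the two dimensions quoted above; the variable and function names are illustrative, and the geometry assumes an idealized circular wafer and a perfectly square chip.

```python
import math

# Figures quoted in the article.
WAFER_DIAMETER_CM = 30.0  # 300-millimeter wafer
CHIP_SIDE_CM = 21.5       # side length of the square chip


def wafer_area_cm2(diameter_cm: float) -> float:
    """Area of an idealized circular wafer."""
    return math.pi * (diameter_cm / 2) ** 2


def largest_inscribed_square_side_cm(diameter_cm: float) -> float:
    """Side of the largest square that fits inside a circle.

    The square's diagonal equals the circle's diameter.
    """
    return diameter_cm / math.sqrt(2)


chip_area = CHIP_SIDE_CM ** 2
wafer_area = wafer_area_cm2(WAFER_DIAMETER_CM)
max_side = largest_inscribed_square_side_cm(WAFER_DIAMETER_CM)

print(f"Chip area:  {chip_area:.2f} cm^2")
print(f"Wafer area: {wafer_area:.2f} cm^2")
print(f"Share of wafer area used: {chip_area / wafer_area:.1%}")
print(f"Largest inscribed square side: {max_side:.2f} cm")
```

Under these assumptions the chip covers roughly two-thirds of the wafer's area, and its quoted side is about the size of the largest square that fits on a round wafer (in fact marginally larger, which may simply reflect rounding in the quoted figure or slightly clipped corners; the article does not say).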

The WSE-3 is built on a 5 nm process and delivers 900,000 cores optimized for processing AI data when used in the CS-3, Cerebras’ AI supercomputer, which has 44 GB of on-chip SRAM.

To store all that data, external memory options include 1.5 TB, 12 TB, or a massive 1.2 petabytes (PB), which is 1,200 TB, depending on the requirements of the AI model being trained. The CS-3 can store up to 24 trillion parameters in a single logical memory space, with no need for partitioning or refactoring. When set up in a 2,048-system configuration, the CS-3 can fine-tune AI models with 70 billion parameters in a single day.
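As a rough sanity check on these memory figures, the sketch below converts the largest option to terabytes and estimates how many bytes of external memory would be available per parameter. It assumes decimal units (1 PB = 1,000 TB) and, as an assumption the article does not state outright, that the 24-trillion-parameter figure corresponds to the 1.2 PB option.

```python
# Decimal (SI) storage units, assumed here because the article equates
# 1.2 PB with 1,200 TB.
TB_IN_BYTES = 10**12
PB_IN_BYTES = 10**15

# Largest external memory option quoted in the article: 1.2 PB.
max_memory_bytes = 12 * 10**14

# Largest model size quoted in the article: 24 trillion parameters.
# Pairing it with the 1.2 PB option is an assumption for illustration.
max_parameters = 24 * 10**12

max_memory_tb = max_memory_bytes / TB_IN_BYTES
bytes_per_parameter = max_memory_bytes / max_parameters

print(f"1.2 PB = {max_memory_tb:,.0f} TB")
print(f"Memory available per parameter: {bytes_per_parameter:.0f} bytes")
```

Under those assumptions, the largest configuration works out to about 50 bytes of external memory per parameter.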

Although AI models have taken the world by storm with their immense capabilities, tech companies know that the models are still in their infancy and need further development. With the WSE-3, Cerebras aims to outperform chipmaker Nvidia’s H200, which has 80 billion transistors, by a factor of 57.
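For context, the 57-fold figure is a performance claim rather than a simple transistor ratio. A quick calculation using only the two transistor counts quoted in this article (the variable names are illustrative) shows the raw transistor ratio is somewhat lower:

```python
# Transistor counts as quoted in the article.
wse3_transistors = 4 * 10**12   # four trillion (WSE-3)
h200_transistors = 80 * 10**9   # 80 billion (Nvidia H200)

# Integer division is exact here because the counts divide evenly.
transistor_ratio = wse3_transistors // h200_transistors

print(f"WSE-3 has {transistor_ratio}x the transistors of the H200")
```

By this count the WSE-3 has 50 times as many transistors, so the claimed 57-fold advantage would have to come from more than transistor count alone.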

In addition, Cerebras has ensured that its newest chips can deliver twice the performance without any increase in size or power consumption. Meanwhile, when compared to GPUs, the AI-specific chip is also reported to require 97 percent less code to train large language models (LLMs).

So far, the WSE-3 is slated for deployment at the Argonne National Laboratory and the Mayo Clinic, where it is expected to expand the institutions’ research capabilities.

Furthermore, the AI supercomputer firm has entered into a joint development agreement with Qualcomm, which will serve as its inference partner, with the aim of improving price-performance for AI inference 10-fold.