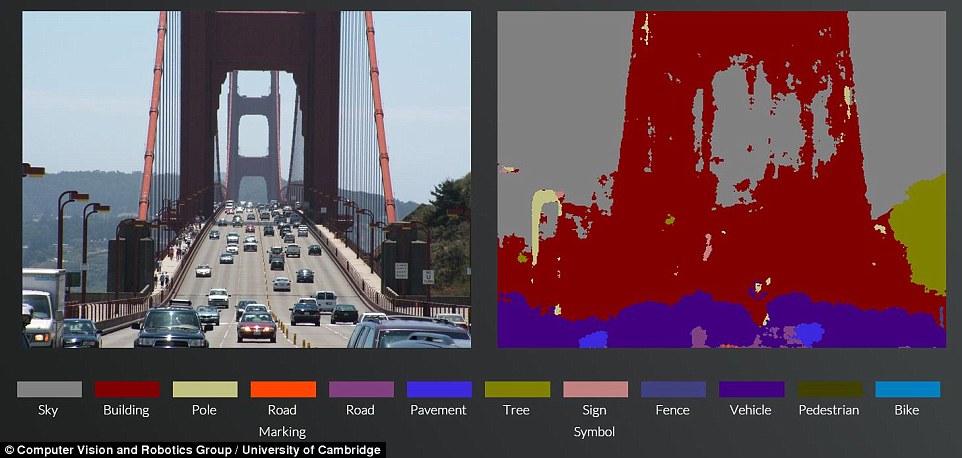

SegNet is a new automated system developed at the University of Cambridge that can “read” a road scene and pick out its features, such as buildings, road signs, people and other vehicles. At first glance its output looks like an ordinary RGB image, but each feature of the scene is painted in its own color. The system uses these colors to tell the categories apart. It also draws on a Bayesian analysis of the road scene.
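To make the color coding concrete, here is a minimal Python sketch of how a grid of per-pixel category labels could be turned into a color image. The category names, the index numbers and the RGB values are illustrative assumptions, not the palette SegNet actually uses.

```python
# Hypothetical palette: the names, indices and RGB colors below are
# illustrative only and are not taken from SegNet itself.
PALETTE = {
    0: ("road", (128, 64, 128)),
    1: ("building", (128, 0, 0)),
    2: ("road sign", (192, 128, 128)),
    3: ("person", (64, 64, 0)),
    4: ("vehicle", (64, 0, 128)),
}


def colorize(label_map):
    """Replace each category index in a 2D label map with its RGB color."""
    return [[PALETTE[label][1] for label in row] for row in label_map]


# A tiny 2x3 "image" of category indices, standing in for a system's output.
labels = [
    [1, 1, 2],
    [0, 0, 4],
]
colored = colorize(labels)
```

Each entry of `colored` is an RGB tuple, so the result can be drawn as an image in which every category shows up as a block of a single color.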

Researchers have provided a free online demonstration for anyone who wants to try the system. Although the system cannot yet be used in a driverless car, its ability to “see” and evaluate road conditions, and to identify accurately where it is and what it is looking at, will be an important factor in any future decision to build it into one.

The SegNet demonstration lets you upload an image to its online software. The image can be of a road anywhere in the world, and the system will pick out and label the features that can affect driving. The system has been tested on urban roads as well as highways, and it has labeled the road components reliably in those tests.

SegNet takes an image and sorts what it sees into 12 basic categories, each identified by a different color. The system copes with a range of lighting conditions and labels 90% of pixels correctly. Earlier systems based on expensive radar or laser sensors have not reached this level of accuracy.
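The article does not say how the 90% figure was measured. Assuming it is ordinary pixel accuracy (the share of pixels whose predicted category matches a hand-labeled reference), the calculation can be sketched in Python as follows. The label maps here are made-up toy data.

```python
def pixel_accuracy(predicted, truth):
    """Fraction of pixels whose predicted category matches the reference."""
    total = 0
    correct = 0
    for pred_row, true_row in zip(predicted, truth):
        for p, t in zip(pred_row, true_row):
            total += 1
            correct += (p == t)
    return correct / total


# Toy 2x5 label maps; indices 0..11 would cover 12 categories.
truth = [
    [0, 0, 1, 1, 2],
    [0, 0, 3, 3, 11],
]
predicted = [
    [0, 0, 1, 1, 2],
    [0, 0, 3, 4, 11],  # one pixel is given the wrong category
]
accuracy = pixel_accuracy(predicted, truth)  # 9 of the 10 pixels match
```

A real evaluation would average this over many test images, and other measures (such as per-category accuracy) may give a different picture when some categories cover far fewer pixels than others.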

The system was initially tested in urban areas and on highways, so it still needs further work before it can reliably predict road components in rural, snowy, or mountainous environments.

The leader of the research, Professor Roberto Cipolla, said, “Vision is our most powerful sense and driverless cars will also need to see. But teaching a machine to see is far more difficult than it sounds. In the short term, we’re more likely to see this sort of system on a domestic robot – such as a robotic vacuum cleaner, for instance. It will take time before drivers can fully trust an autonomous car, but the more effective and accurate we can make these technologies, the closer we are to the widespread adoption of driverless cars and other types of autonomous robotics.”

The technology will be presented at the International Conference on Computer Vision in Santiago, Chile.