Neural networks are computing systems loosely modeled on the human brain and nervous system. They are used for deep structured learning (deep learning), which employs sets of algorithms to model the kind of high-level concepts that the human brain normally abstracts from data.

With the volume of data growing exponentially, people can no longer process it all unaided, and computer systems are needed to crunch the information and produce output of value to humans. Deep learning systems, among the most advanced forms of machine learning, pass data through many processing layers in an attempt to model the complexity of the human brain.
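To make "many processing layers" concrete, here is a minimal sketch in plain Python of data passing through a small stack of fully connected layers. The layer sizes, the random weights, and the helper names (`dense`, `make_layer`, `forward`) are illustrative assumptions, not anything taken from Movidius' software; a real network would learn its weights from data.

```python
import random

def relu(values):
    # Non-linearity applied between layers: negative values become zero.
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    # One fully connected layer; `weights` holds one row per output unit.
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def make_layer(n_in, n_out, rng):
    # Hypothetical random initialisation; training is out of scope here.
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)]
               for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(inputs, layers):
    # Feed the input through each layer in turn.
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:
            # Hidden layers get a non-linearity; the last layer stays raw.
            activations = relu(activations)
    return activations

rng = random.Random(0)          # fixed seed so runs are repeatable
layer_sizes = [4, 8, 8, 3]      # assumed: 4 inputs, two hidden layers, 3 outputs
layers = [make_layer(a, b, rng)
          for a, b in zip(layer_sizes, layer_sizes[1:])]

scores = forward([0.5, -0.2, 0.1, 0.9], layers)
print(scores)                   # three raw output scores
```

Each layer transforms the output of the one before it, which is what lets a deep stack build up progressively more abstract features from raw input.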

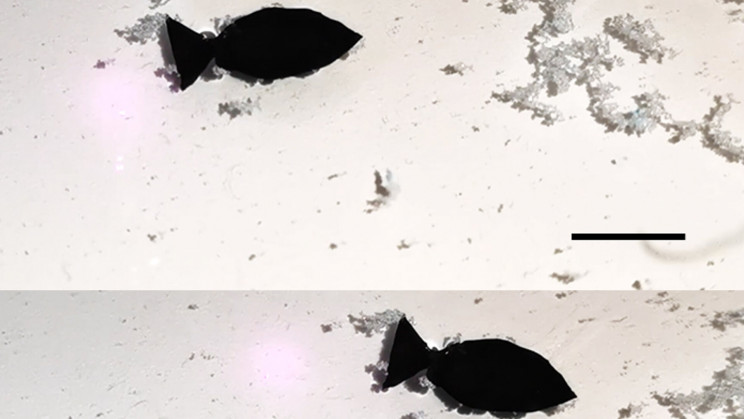

One area in which deep learning is needed is giving computing devices human-level vision capabilities.

Embedded Neural Network Compute Framework

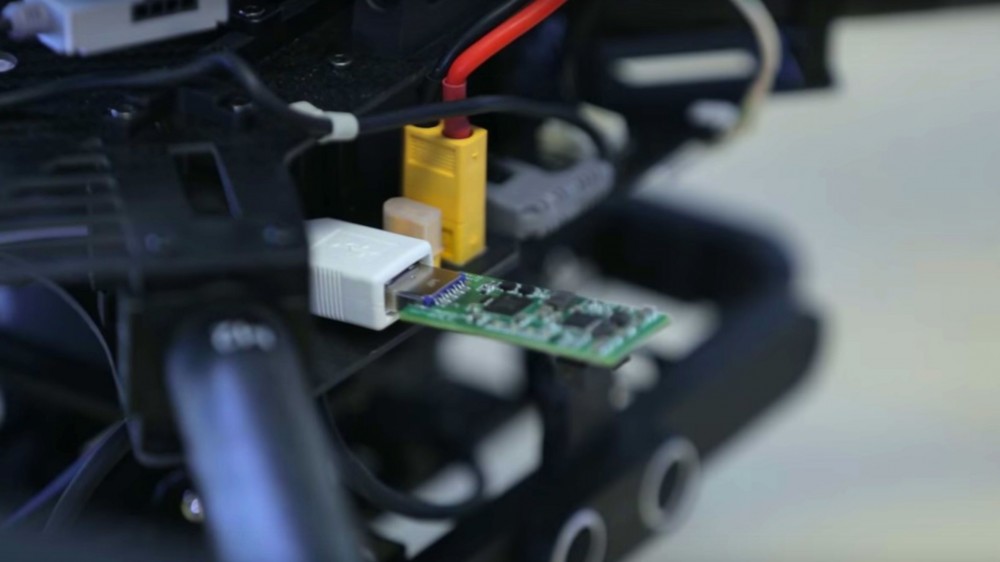

Movidius, a leader in high-performance, high-efficiency machine vision technology and creator of the Myriad 2 processor for vision applications, has just announced its new deep learning chip, housed in a USB stick, called the Fathom Neural Compute Stick (FNCS).

Fathom can run within the power budget available on mobile devices thanks to Movidius’ Vision Processing Unit (VPU). The VPU houses camera interfaces, vector VLIW (“SHAVE”) processors, atomic imaging vision engines, and an intelligent memory fabric. Movidius also offers vision software libraries that developers can use to create new applications on the VPU.

One example of an application built on the FNCS is object recognition software that will be installed on smartphones to identify and categorize objects.
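As a rough illustration of the "identify and categorize" step, the sketch below turns a network's raw output scores into ranked labels. The labels, the score values, and the `top_k` helper are all hypothetical and chosen only for the example; they do not come from the Fathom software.

```python
import math

def softmax(scores):
    # Convert raw scores into probabilities that sum to 1.
    # Subtracting the maximum first keeps exp() numerically stable.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def top_k(scores, labels, k=2):
    # Pair each label with its probability and keep the k most likely.
    probabilities = softmax(scores)
    ranked = sorted(zip(labels, probabilities),
                    key=lambda pair: pair[1], reverse=True)
    return ranked[:k]

# Hypothetical categories and raw scores for a single image.
labels = ["cat", "dog", "bicycle"]
scores = [2.0, 0.5, -1.0]

predictions = top_k(scores, labels, k=2)
for label, probability in predictions:
    print(f"{label}: {probability:.2f}")
```

Reporting the top few candidates with their probabilities, rather than a single answer, lets an application decide how confident a prediction must be before acting on it.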

Following is an introduction to the Fathom Neural Compute Stick by Movidius.

The following video explains Movidius’ mission to bring human-level vision to connected devices.