Researchers at Cornell have developed two technologies that track eye movements and interpret facial expressions using sonar-like sensing. Both are compact enough to fit into mainstream smartglasses and virtual reality (VR) or augmented reality (AR) headsets, and they consume substantially less power than camera-based counterparts.

Privacy-Preserving Technology

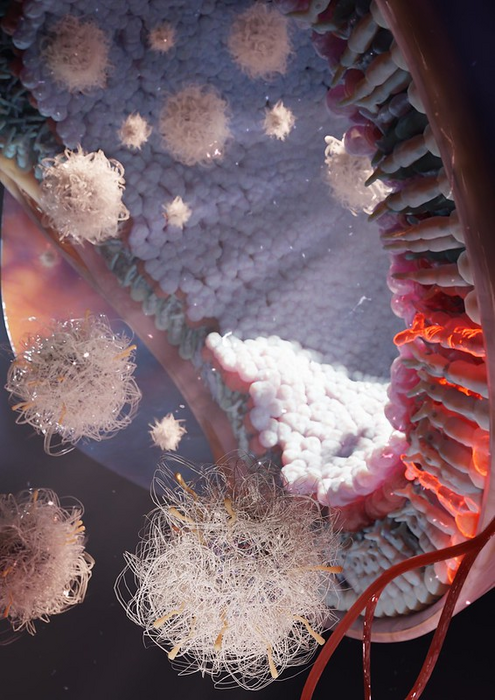

Imagine smartglasses that can understand your focus and even your emotions without intrusive cameras. That’s the potential of these new technologies, named GazeTrak and EyeEcho. GazeTrak is the first eye-tracking system to rely on sound. It uses speakers and microphones embedded in the glasses’ frame to emit inaudible sound waves that bounce off your eyes. By analyzing how those waves reflect, the system can determine where you’re looking. EyeEcho takes things a step further by interpreting facial expressions as well. It similarly uses sound waves to pick up subtle changes in the shape of your face, which lets the system recreate your expressions on a virtual avatar in real time.
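To make the echo idea concrete, here is a minimal sketch of sonar-style sensing in general, not of GazeTrak or EyeEcho themselves, whose signal processing is not described here. It emits a known chirp, simulates a weaker delayed reflection, and recovers the delay by cross-correlating the recording with the emitted signal. All function names, the chirp frequencies, and the delay and attenuation values are assumptions chosen for illustration.

```python
import math


def make_chirp(n_samples, f_start=0.05, f_end=0.25):
    """Return a linear chirp; frequencies are in cycles per sample (illustrative values)."""
    chirp = []
    for n in range(n_samples):
        # Instantaneous frequency rises linearly from f_start to f_end.
        phase = 2 * math.pi * (f_start * n + (f_end - f_start) * n * n / (2 * n_samples))
        chirp.append(math.sin(phase))
    return chirp


def simulate_echo(emitted, delay, attenuation, total_len):
    """Build a toy recording: a weaker, delayed copy of the emitted signal."""
    received = [0.0] * total_len
    for i, sample in enumerate(emitted):
        if delay + i < total_len:
            received[delay + i] += attenuation * sample
    return received


def estimate_delay(emitted, received):
    """Return the lag (in samples) at which the cross-correlation peaks."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(len(received) - len(emitted) + 1):
        score = sum(e * received[lag + i] for i, e in enumerate(emitted))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


# Hypothetical scenario: the reflection arrives 37 samples after emission.
chirp = make_chirp(64)
recording = simulate_echo(chirp, delay=37, attenuation=0.3, total_len=200)
lag = estimate_delay(chirp, recording)
print(lag)
```

In this toy setup the estimated lag matches the simulated 37-sample delay. A real system would have to turn many such echo measurements into a gaze direction or an expression, a step this sketch does not attempt.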

Enhanced User Experience

The benefits of this technology are twofold. First, it offers a significant boost to privacy. With no cameras involved, you don’t need to worry about your every glance or expression being recorded. Second, this approach uses considerably less power than camera-based systems, which can extend battery life in smartglasses and VR headsets. This innovation from Cornell paves the way for a new generation of smartglasses that are not only more user-friendly but also more power-efficient.

Cornell scientists have also developed another face-reading sonar technology, called EchoSpeech. It can be integrated into smartglasses, where it monitors the wearer’s lip movements and interprets silently spoken words.