Robot Mental Models & “Belief Systems”

The challenge for roboticists is to increase the number of tasks that robots can complete without human intervention. A robot continually takes stock of its environment and makes best guesses as to “what is what” and “where is where.” The main sticking point appears to be improving the accuracy of a robot’s perception of its environment. In essence, a robot, like a human, must have an accurate mental model and “belief system” in order to navigate reality successfully.

According to Shayegan Omidshafiei, a graduate student at MIT, the challenge is to develop an ever-growing number of algorithms and test how they perform in real-world scenarios. As many engineers know from experience, sensor measurements have limitations and can be incomplete or distorted. When this happens, robots are “thrown off their game.”
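To make the idea of a robot “belief” more concrete, here is a minimal sketch of one common textbook technique, a discrete Bayes filter, which keeps a probability for every possible location and revises it after each noisy sensor reading. It is not the MIT team’s code; the five-cell world, the door/wall sensor, the 80% sensor accuracy, and the noise-free, wrap-around motion are all hypothetical simplifications chosen for illustration.

```python
# Hypothetical 1-D world (illustration only): each cell is a door (True) or a wall (False).
WORLD = [True, False, False, True, False]


def normalize(belief: list[float]) -> list[float]:
    """Rescale so the probabilities over all cells sum to 1."""
    total = sum(belief)
    return [b / total for b in belief]


def sense(belief: list[float], saw_door: bool, p_correct: float = 0.8) -> list[float]:
    """Bayes measurement update: reweight each cell by how well it explains the reading.

    p_correct is an assumed sensor accuracy; the sensor is wrong 1 - p_correct of the time.
    """
    updated = []
    for b, is_door in zip(belief, WORLD):
        likelihood = p_correct if is_door == saw_door else 1 - p_correct
        updated.append(b * likelihood)
    return normalize(updated)


def move(belief: list[float], steps: int = 1) -> list[float]:
    """Prediction step for an idealized, noise-free move in a world that wraps around."""
    n = len(belief)
    return [belief[(i - steps) % n] for i in range(n)]


belief = [1 / len(WORLD)] * len(WORLD)  # no idea where the robot is yet
belief = sense(belief, saw_door=True)
belief = move(belief, steps=1)
belief = sense(belief, saw_door=False)
print([round(b, 3) for b in belief])  # → [0.026, 0.421, 0.105, 0.026, 0.421]
```

After a “door” reading, one step, and a “wall” reading, most of the probability lands on cells 1 and 4, the only cells one step past a door. A wrong reading never rules a cell out entirely; it only shifts weight, which is one way a filter limits how badly a single distorted measurement can throw a robot off.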

Complicating Matters: Swarm Robots & Their Decision Making Processes

When engineers deal with complex systems of many robots, the robots’ interactions and behavior become much harder to decipher; they can be as difficult to understand as complex human relationships. Engineers walk away from testing sessions scratching their heads and asking, “Why did these algorithms produce that behavior?”

Autonomous Robot “Mind Reading”

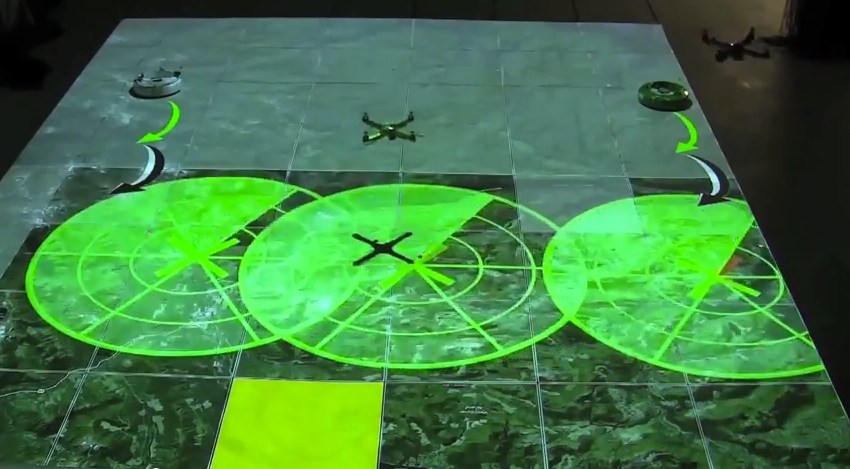

According to Omidshafiei and his colleague Ali-Akbar Agha Mohammadi, a postdoctoral researcher at MIT, the way forward is to test algorithms in a variety of virtual reality environments. To do this, they have developed a projection system that creates the simulated environment and captures motion within it.

The projection system is a “measurable virtual reality system.” The current version uses a ceiling-mounted motion capture system and lasers to track the movement of ground or aerial robots in 3D. This setup allows rapid prototyping of robots in what is termed a “cyberphysical system,” which speeds up the design of learning, perception, and planning algorithms for autonomous systems.

Current algorithms for robot swarms perform best when a single, central “leader agent” robot controls all the other robots, or agents, in the system. When swarms become large, however, the leader agent must delegate the assignment of tasks to other agents.
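The article does not describe how the MIT algorithms split up the work, so the following is only a hypothetical sketch of what two-level delegation can look like. A leader routes each task to the nearest “sub-leader,” and each sub-leader greedily hands its tasks to its nearest free robot. The names `SubLeader` and `delegate`, the distance-based rules, and the coordinates are all illustrative assumptions.

```python
import math
from dataclasses import dataclass, field

Point = tuple[float, float]


@dataclass
class SubLeader:
    """Hypothetical mid-level agent that manages a group of robots."""
    name: str
    position: Point
    robots: dict[str, Point]  # robot id -> current position
    tasks: list[Point] = field(default_factory=list)

    def assign(self) -> dict[str, Point]:
        """Greedy local assignment: each task goes to the nearest robot that is still free."""
        free = dict(self.robots)
        plan: dict[str, Point] = {}
        for task in self.tasks:
            if not free:
                break  # more tasks than robots; leftovers stay unassigned in this sketch
            robot = min(free, key=lambda r: math.dist(free[r], task))
            plan[robot] = task
            del free[robot]
        return plan


def delegate(tasks: list[Point], sub_leaders: list[SubLeader]) -> dict[str, Point]:
    """Leader step: route each task to the nearest sub-leader instead of picking robots itself.

    Note: this appends to each sub-leader's task list (mutates the inputs).
    """
    for task in tasks:
        nearest = min(sub_leaders, key=lambda s: math.dist(s.position, task))
        nearest.tasks.append(task)
    plan: dict[str, Point] = {}
    for s in sub_leaders:
        plan.update(s.assign())
    return plan


west = SubLeader("west", (0.0, 0.0), {"r1": (0.0, 1.0), "r2": (1.0, 0.0)})
east = SubLeader("east", (10.0, 0.0), {"r3": (10.0, 1.0)})
plan = delegate([(0.0, 2.0), (9.0, 0.0), (2.0, 0.0)], [west, east])
print(plan)
```

The point of the split is that the leader only compares each task against a handful of sub-leaders rather than against every robot in the swarm, while the fine-grained choices happen locally.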

The new virtual system allows Omidshafiei and colleagues to better understand and track communication between leader agents and other agents, so that the movement and behavior of each robot can be understood more fully and accurately.
